Paragraph Pollution: AI is (probably) greener than you typing on a laptop

When you use ChatGPT, are you worried about a potentially massive environmental cost? You probably shouldn't be.

It’s a familiar worry, one we’ve also had about earlier innovations like fast fashion and cryptocurrencies, but we’d make the case that it emphatically doesn’t hold for generative AI tools. In fact, using AI is more environmentally friendly than the alternative: simply typing on a laptop.

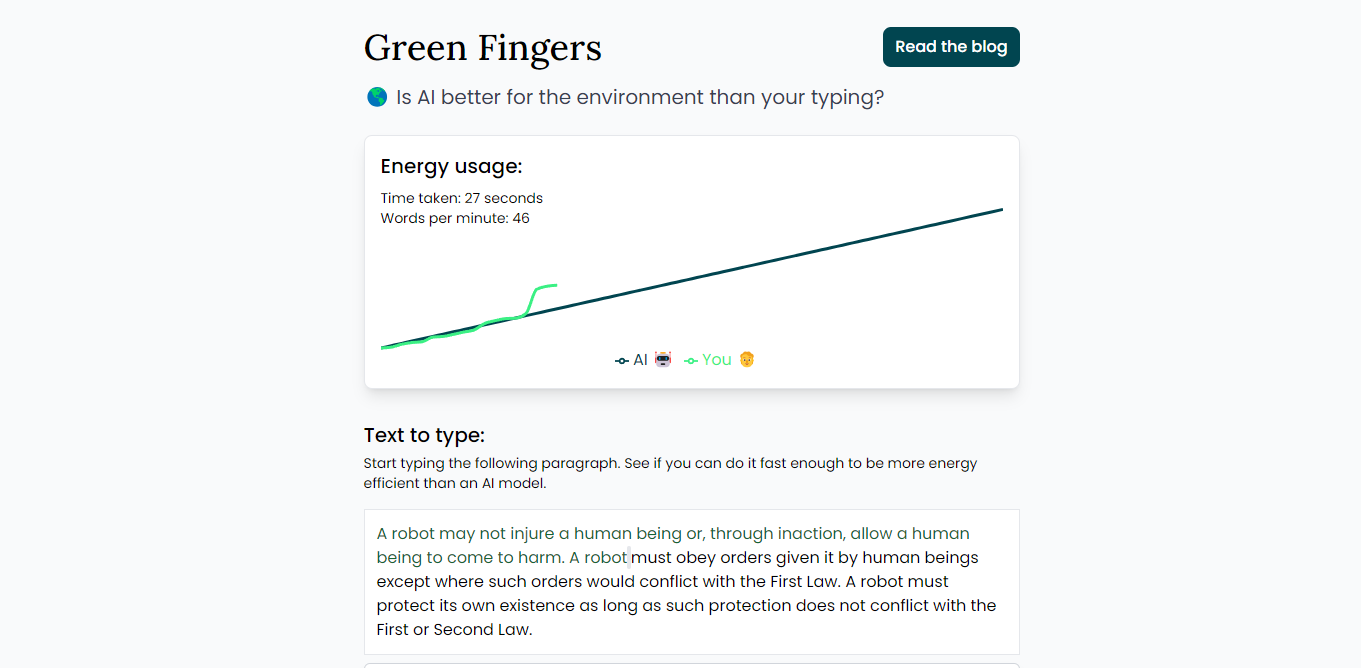

You can read the next few paragraphs to follow our maths, or you can skip straight to our “Green Fingers: You vs AI” challenge.

The carbon footprint of a paragraph (in a paragraph)

What’s the cost of producing ~100 words? There are two kinds of cost: 1) the energy used to create the words, and 2) the energy used to produce the “writing materials”. That second cost could be the cost of manufacturing a pen, a laptop or a papyrus scroll, or of training a Large Language Model. It might vary dramatically, but crucially, it doesn’t change when you write one extra paragraph. So for the marginal cost of a paragraph, we can look at two directly comparable things: the energy ChatGPT uses to write it for you vs the energy your laptop draws while you type it.

1) ChatGPT’s energy usage

AI companies have not generally published the energy used to generate text with their models. However, we can approximate it from the price OpenAI charges to use its AI.

As of right now, OpenAI charges $0.0015 per 1,000 output tokens (roughly 700 words) for the model used by ChatGPT. That means generating a 100-word paragraph costs just over $0.0002, which we round up to ~$0.00025 to stay conservative.
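A minimal Python sketch of this step, assuming only the figures quoted above ($0.0015 per 1,000 output tokens, ~700 words per 1,000 tokens). The function name is hypothetical. The unrounded result comes out just over $0.0002, which the ~$0.00025 figure rounds up.

```python
def paragraph_price_usd(words: int,
                        usd_per_1k_tokens: float = 0.0015,
                        words_per_1k_tokens: float = 700) -> float:
    """Price of generating `words` words of output at a per-token API rate."""
    tokens = words * 1000 / words_per_1k_tokens  # convert words to tokens
    return tokens / 1000 * usd_per_1k_tokens     # convert tokens to dollars


print(f"${paragraph_price_usd(100):.6f}")  # prints $0.000214
```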

We’ll assume that AI models have roughly the same split of operating costs as a typical data centre. From this US Chamber of Commerce report, 40% of a data centre’s operating cost is power. To be conservative, we’ll assume that OpenAI passes 100% of its costs on to users and makes 0% operating profit. That gives an electricity cost of about $0.0001 (40% of $0.00025) for 100 words.
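The same step as a small Python sketch, assuming (as the post does) zero margin, so the whole price is treated as operating cost, and a 40% power share taken from the data-centre figure. The function name is hypothetical.

```python
def electricity_cost_usd(price_usd: float, power_share: float = 0.40) -> float:
    """Electricity portion of a price, assuming the entire price is operating
    cost (zero margin) and that power makes up `power_share` of that cost."""
    return price_usd * power_share


print(f"${electricity_cost_usd(0.00025):.4f}")  # prints $0.0001
```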

We can roughly convert this into energy used by dividing by the average price of industrial electricity in America (9.5 cents per kilowatt-hour). That means our $0.0001 buys about 0.0011 kilowatt-hours. Put another way: enough to make 3% of a cup of tea.
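A sketch of the conversion in Python, assuming the 9.5 cents/kWh industrial rate quoted above. The function name is hypothetical.

```python
def usd_to_kwh(cost_usd: float, usd_per_kwh: float = 0.095) -> float:
    """Energy bought by `cost_usd` at a flat electricity price."""
    return cost_usd / usd_per_kwh


chatgpt_kwh = usd_to_kwh(0.0001)
print(f"{chatgpt_kwh:.4f} kWh")  # prints 0.0011 kWh (unrounded: ~0.00105)
```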

2) Your laptop’s energy usage

In contrast, this is extremely easy to work out: multiply your laptop’s wattage by how long you spend typing. For an average laptop, that’s 50 watts. For an average person, typing speed is 40 words per minute. So typing a 100-word paragraph takes two and a half minutes and uses 0.0021 kilowatt-hours of energy.
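The laptop side as a Python sketch, assuming the averages above (a 50 W laptop, 40 words per minute) and that the laptop draws a constant power while you type. The function name is hypothetical.

```python
def typing_kwh(words: int, wpm: float = 40, laptop_watts: float = 50) -> float:
    """Energy a laptop uses while someone types `words` words at `wpm`."""
    minutes = words / wpm
    hours = minutes / 60
    return laptop_watts * hours / 1000  # watt-hours to kilowatt-hours


print(f"{typing_kwh(100):.4f} kWh")  # prints 0.0021 kWh (100 words at 40 wpm = 2.5 min)
```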

In short, the average person typing on a laptop is roughly twice as bad for the planet as using ChatGPT.
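A quick check of the ratio in Python, recomputing both figures from the inputs used earlier in the post. Depending on whether you use the rounded or unrounded numbers, it lands between about 1.9 and 2.

```python
laptop_kwh = 50 * (100 / 40) / 60 / 1000  # 50 W laptop, 100 words at 40 wpm
chatgpt_kwh = 0.0001 / 0.095              # $0.0001 of electricity at 9.5 cents/kWh

print(f"{laptop_kwh / chatgpt_kwh:.2f}x")  # prints 1.98x
```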

But of course, that’s just the average. Maybe you type fast enough to be more energy efficient than an AI? For a bit of fun, you can put that to the test here.
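If you’d rather solve it than test it, here is a hedged Python sketch of the break-even typing speed. It assumes the AI’s energy scales linearly with the number of words and reuses the 50 W laptop figure; the function name is hypothetical. With the unrounded AI figure the break-even speed is about 79 wpm (about 76 wpm with the rounded 0.0011 kWh).

```python
def break_even_wpm(ai_kwh_per_word: float, laptop_watts: float = 50) -> float:
    """Typing speed at which a laptop uses as much energy per word as the AI.

    Typing one word takes 1/wpm minutes, which costs
    laptop_watts / (wpm * 60 * 1000) kWh. Setting that equal to
    ai_kwh_per_word and solving for wpm gives the expression below.
    """
    return laptop_watts / (60 * 1000 * ai_kwh_per_word)


ai_kwh_per_word = (0.0001 / 0.095) / 100  # ~0.00105 kWh per 100 words
print(f"{break_even_wpm(ai_kwh_per_word):.0f} wpm")  # prints 79 wpm
```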

The future

Even if you can currently type fast enough to hold your own against the AI on energy efficiency, the situation is changing rapidly. OpenAI cut its prices by 25-50% earlier this month, following a 90% cost decrease last March. There are also alternatives, such as Mistral, that are even cheaper than OpenAI’s latest prices. There are even ways to run an AI model on your own phone, without needing a big server in a data centre.

Large Language Models are going the way of the dishwasher. Their machine-ness means they intuitively feel like they should be bad for the environment, but in reality they're just more efficient than their manual or human equivalent.

And the efficiency of these models is improving so quickly that this blog post really only makes sense this month. In no time at all, this conclusion will be obvious.

Notes: for more detail on some of these assumptions, as well as an analysis of the up-front training cost, see a follow-up post.